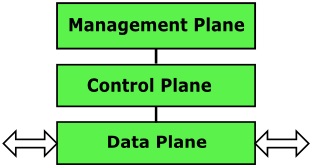

Switching Process

The performance characteristics of a switch are determined largely by how it processes packets. Newer switches use ASICs (Application Specific Integrated Circuits) to switch packets in hardware at high speed. This is much faster than processing packets in software on the route processor of a multilayer switch or router. While most packet forwarding occurs in ASICs, some network traffic, such as encrypted packets, must still be processed in software.

Data Plane

The switch data plane is where packet forwarding occurs. A packet is forwarded after the forwarding decision has been made by the Supervisor Engine PFC (Policy Feature Card) or a line card DFC (Distributed Forwarding Card). Both the PFC and DFC forward packets in hardware ASICs. The PFC forwarding engine on the Supervisor Engine performs Layer 2 and Layer 3 lookups before deciding how to forward the packet. Line cards with a DFC installed make Layer 2 and Layer 3 forwarding decisions locally and send the packet across the switch fabric to the destination line card, which forwards it out the specific egress port. In this context, "processing and forwarding of packets" means that the switch or router examines the Layer 2 and Layer 3 header information (addresses etc.) and rewrites the packet before forwarding it.

Control Plane

The control plane is where the switch processes the network control packets used to manage network activity. The Supervisor Engine includes a route processor on the MSFC (Multilayer Switch Feature Card) that builds the routing table. The route processor must handle certain control packets such as routing advertisements, keepalives, ICMP, ARP requests and packets destined to the local IP addresses of the router. A separate switch processor builds the CAM table for Layer 2 packet processing and handles Layer 2 control packets such as BPDU, CDP, VTP, IGMP, PAgP, LACP and UDLD. Processing control packets increases Supervisor Engine CPU utilization.

Management Plane

The management plane is where all network management traffic is processed. This includes packets from management protocols such as Telnet, SSH, SNMP, NTP and TFTP. The route processor handles some of this traffic in addition to control plane traffic. The management plane also manages the switch passwords and coordinates traffic between the management, control and data planes.

Traditional Fast Switching (Non-CEF [Cisco Express Forwarding])

Network switches without a PFC (Policy Feature Card) module for Layer 2 and Layer 3 hardware switching still use traditional fast switching. The switch Layer 2 CAM (Content Addressable Memory) table lists the MAC addresses of all connected devices along with their port assignment and VLAN membership. The switch builds the CAM table by learning the source MAC address, ingress port and VLAN of each frame it receives; broadcasts and frames sent to a destination that is not yet in the CAM table are flooded out all ports in the VLAN. Multilayer switches and routers also have a route processor that performs Layer 3 lookups and builds the routing table used for packet routing decisions. The route processor also builds an ARP table, populated by the ARP protocol, that maps IP addresses on directly connected subnets (such as the next hop toward a remote server) to MAC addresses for Layer 3 forwarding.

The packet arrives at the ingress switch port and the frame is copied to memory. The switch does a Layer 2 CAM table lookup of the destination MAC address. A multilayer switch or router sends an ARP request if the MAC address it needs is not in its ARP table.
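The CAM table behavior can be sketched as a small learning table. This is a minimal illustration, assuming a hypothetical `CamTable` class keyed by (VLAN, MAC); it models source address learning and flooding of broadcast or unknown destinations, not the actual switch hardware.

```python
from typing import Dict, List, Tuple

BROADCAST = "ff:ff:ff:ff:ff:ff"


class CamTable:
    """Hypothetical Layer 2 learning table: (VLAN, MAC) -> port."""

    def __init__(self) -> None:
        self.entries: Dict[Tuple[int, str], int] = {}

    def receive(self, vlan: int, src_mac: str, dst_mac: str,
                in_port: int, vlan_ports: List[int]) -> List[int]:
        """Learn the source address, then return the list of egress ports."""
        # Learning: the source MAC is reachable through the ingress port.
        self.entries[(vlan, src_mac)] = in_port
        out_port = self.entries.get((vlan, dst_mac))
        if dst_mac == BROADCAST or out_port is None:
            # Broadcast or unknown unicast: flood to every other port in the VLAN.
            return [p for p in vlan_ports if p != in_port]
        if out_port == in_port:
            return []  # destination is behind the ingress port: filter the frame
        return [out_port]


if __name__ == "__main__":
    cam = CamTable()
    ports = [1, 2, 3, 4]
    print(cam.receive(10, "00:00:5e:00:53:0a", "00:00:5e:00:53:0b", 1, ports))  # [2, 3, 4] (flooded)
    print(cam.receive(10, "00:00:5e:00:53:0b", "00:00:5e:00:53:0a", 3, ports))  # [1] (learned)
```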

Most often the network server is on a remote subnet in the data center. The desktop already knows the server IP address from the DNS lookup performed when the session started, so it sends the packet to its default gateway (typically the distribution switch) rather than resolving the server MAC address itself. The multilayer switch that routes the packet sends an ARP request for the next hop MAC address if it is not already known, learns that address from the ARP reply (updating its ARP table and CAM table), and forwards the frame out the uplink egress port toward the next hop.

Note that the switch won't send another ARP request for that destination until the ARP entry ages out (expires); later sessions from any desktop connected to that switch use the existing ARP entry. The MSFC (route switch processor) on the Supervisor Engine routes the first packet to a destination in software, and subsequent packets to that destination are forwarded using the fast switching cache.
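The "route the first packet, cache the rest" idea behind fast switching can be sketched as a demand-built cache. The `FastSwitchCache` class and the `slow_lookup` callback (standing in for the software routing table and ARP lookup) are hypothetical names used only for illustration.

```python
from typing import Callable, Dict, Optional, Tuple

# (egress interface, next hop MAC address)
Rewrite = Tuple[str, str]


class FastSwitchCache:
    """Hypothetical demand-built route cache (fast switching)."""

    def __init__(self, slow_lookup: Callable[[str], Optional[Rewrite]]) -> None:
        # slow_lookup stands in for the software routing table + ARP lookup.
        self.slow_lookup = slow_lookup
        self.cache: Dict[str, Rewrite] = {}
        self.process_switched = 0  # packets that needed the slow path

    def forward(self, dst_ip: str) -> Optional[Rewrite]:
        entry = self.cache.get(dst_ip)
        if entry is None:
            # First packet to this destination: route it in software.
            self.process_switched += 1
            entry = self.slow_lookup(dst_ip)
            if entry is not None:
                self.cache[dst_ip] = entry  # later packets hit the cache
        return entry

    def invalidate(self) -> None:
        # A routing or ARP change makes cached entries stale.
        self.cache.clear()


if __name__ == "__main__":
    cache = FastSwitchCache(lambda ip: ("Gi1/1", "00:00:5e:00:53:01"))
    for _ in range(3):
        cache.forward("10.1.1.10")
    print(cache.process_switched)  # 1: only the first packet took the slow path
```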

Cisco Express Forwarding (CEF)

Routing packets in software on the route processor is much slower and more CPU intensive than hardware forwarding. Cisco Express Forwarding (CEF) switches packets at Layer 2 and Layer 3 in hardware and is supported on most Cisco routers and multilayer switches to optimize performance. The MSFC (Multilayer Switch Feature Card) route processor builds the routing table in software (control plane) and derives from it an optimized table called the FIB (Forwarding Information Base). Each FIB entry contains a destination prefix and a next hop address. The FIB is pushed to the PFC forwarding engine (data plane) and to any DFC for hardware switching of packets. The MSFC also builds a Layer 2 adjacency table from the FIB and ARP table, containing each next hop address and its MAC address. The adjacency table is pushed to the PFC and any DFC modules as well.

Each FIB entry points to an adjacency table entry that holds the Layer 2 information needed to forward the packet. The Layer 2 frame and Layer 3 packet headers are rewritten before the packet is placed in the switch port queue. When a routing change occurs, the MSFC updates the routing table, then updates the FIB and adjacency table and pushes those changes to the PFC and DFC modules. Some network services cannot be hardware switched and must be software switched by the route processor; depending on the platform, examples include encapsulation, compression, encryption, access control lists and intrusion detection. For performance, it is important to deploy routers with enough processing power, memory and scalability when implementing those services. Large routing tables, ACL (Access Control List) packet inspection and queuing all contribute to CPU usage and should be minimized where possible.
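A FIB lookup that follows a pointer to an adjacency entry can be sketched as follows, assuming hypothetical `Fib` and `Adjacency` classes. It uses a linear longest prefix match with Python's standard `ipaddress` module; the PFC and DFC perform the equivalent lookup in TCAM hardware, so this only illustrates the data relationship.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Adjacency:
    next_hop: str      # next hop IP address (from the FIB)
    next_hop_mac: str  # MAC address resolved from the ARP table
    interface: str     # egress interface


class Fib:
    """Hypothetical FIB: prefix -> next hop, with a pointer into the adjacency table."""

    def __init__(self) -> None:
        self.routes: List[Tuple[ipaddress.IPv4Network, str]] = []
        self.adjacencies: Dict[str, Adjacency] = {}

    def add_route(self, prefix: str, next_hop: str) -> None:
        self.routes.append((ipaddress.IPv4Network(prefix), next_hop))
        # Longest prefixes first, so the first match is the longest match.
        self.routes.sort(key=lambda route: route[0].prefixlen, reverse=True)

    def add_adjacency(self, adj: Adjacency) -> None:
        self.adjacencies[adj.next_hop] = adj

    def lookup(self, dst: str) -> Optional[Adjacency]:
        addr = ipaddress.IPv4Address(dst)
        for prefix, next_hop in self.routes:
            if addr in prefix:
                # Follow the FIB entry's pointer to its adjacency entry.
                return self.adjacencies.get(next_hop)
        return None  # no route


if __name__ == "__main__":
    fib = Fib()
    fib.add_route("0.0.0.0/0", "192.0.2.1")
    fib.add_route("10.20.0.0/16", "192.0.2.5")
    fib.add_adjacency(Adjacency("192.0.2.1", "00:00:5e:00:53:01", "Te1/1"))
    fib.add_adjacency(Adjacency("192.0.2.5", "00:00:5e:00:53:05", "Te1/2"))
    print(fib.lookup("10.20.3.4"))     # matches the /16 -> Te1/2 adjacency
    print(fib.lookup("198.51.100.7"))  # default route -> Te1/1 adjacency
```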

CEF Load Balancing

CEF can load balance packets on a per destination basis. All packets for a given source and destination address pair are assigned the same path from among the available equal cost paths; this is called per destination load balancing. Packets for a new source and destination pair may be assigned a different path, which spreads traffic across the available paths. The advantage of per destination load balancing is that packets arrive in order while utilization is distributed across all paths and links. Newer Cisco IOS releases use an algorithm that adds a 32 bit router specific value to the hash function at each router and multilayer switch, so that neighboring devices do not all make the same path choice. The resulting 4 bit hash value is used to assign a network path.

With two equal cost paths to the same destination, traffic is distributed across both links, roughly evenly when there are enough distinct flows. This occurs, for instance, with dual Gigabit uplinks between distribution and core switches and between server farm switches and distribution switches.
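Per destination load balancing can be illustrated with a sketch that hashes the source and destination addresses. The hash here (CRC-32 from `zlib` over both addresses and a 32 bit router ID, reduced to 4 bits to pick one of 16 buckets) is an assumption for illustration, not Cisco's actual algorithm; the point is that the same address pair always maps to the same path, while a different router ID can change the mapping.

```python
import ipaddress
import zlib
from typing import List

NUM_BUCKETS = 16  # a 4 bit hash value selects one of 16 buckets


def build_buckets(paths: List[str]) -> List[str]:
    """Spread the equal cost paths across the hash buckets round-robin."""
    return [paths[i % len(paths)] for i in range(NUM_BUCKETS)]


def select_path(src: str, dst: str, router_id: int, buckets: List[str]) -> str:
    """Pick a path for a source/destination pair (illustrative hash, not Cisco's)."""
    key = (ipaddress.IPv4Address(src).packed
           + ipaddress.IPv4Address(dst).packed
           + router_id.to_bytes(4, "big"))  # 32 bit router specific value
    bucket = zlib.crc32(key) & 0xF          # reduce to 4 bits
    return buckets[bucket]


if __name__ == "__main__":
    buckets = build_buckets(["Gi1/1", "Gi1/2"])
    for host in range(10, 15):
        src = f"10.1.1.{host}"
        # The same pair always hashes to the same uplink, so packets stay in order.
        print(src, select_path(src, "10.9.9.9", 0x1234ABCD, buckets))
```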

CEF Switching Modes

Centralized Switching

The centralized switching model uses the Supervisor Engine for all Layer 2 and Layer 3 packet processing. The Supervisor Engine includes a route switch processor (MSFC) for routing and a PFC forwarding engine for Layer 2 and Layer 3 packet forwarding. The centralized switching architecture uses line cards that do not have a DFC module onboard, so they rely on the Supervisor Engine for all forwarding decisions. The onboard MSFC builds and maintains the routing table.

The MSFC uses the routing table to derive the FIB table, an optimized routing table containing route prefixes, next hop IP addresses and outgoing interfaces for hardware switching of packets. The Layer 2 adjacency table, similar to the CAM table, holds optimized Layer 2 forwarding information: the next hop IP address and next hop MAC address. The MSFC pushes both tables to the Supervisor Engine PFC module, which uses them to forward all packets in hardware. The following steps describe the centralized switching model.

1. The packet arrives at the switch ingress port and is passed to the line card ASIC.

2. The fabric ASIC forwards the packet header over the shared bus to the Supervisor Engine. All line cards connected to the shared bus will see this header.

3. The Supervisor Engine forwards the packet header to the Layer 2 and Layer 3 forwarding engine (PFC), where it is copied to memory. The PFC forwarding engine does a CAM table lookup based on the destination MAC address. The switch sends an ARP request if the next hop MAC address is not in the ARP table.

4. The Layer 2 forwarding engine then forwards the packet to the Layer 3 engine (PFC) for Layer 3 and Layer 4 processing which includes NetFlow, QoS, ACLs, Security ACLs, Layer 3 lookup (FIB Table) and Layer 2 Lookup (Adjacency Table). That information is used to do packet header rewrites before forwarding the packets.

5. The PFC on the Supervisor Engine then forwards the results of the Layer 2 and Layer 3 lookups back to the Supervisor Engine.

6. The Supervisor Engine forwards the result of the lookups over the shared bus to all connected line cards.

7. The source line card where the packet arrived sends the packet with payload data over the switch fabric to the destination line card.

8. The destination line card will receive the packet, rewrite the packet header and forward the data out the destination egress port.

Packet Rewrite

• Layer 2 Source and Destination MAC Address

• Layer 3 (IP) TTL

• Layer 3 (IP) Header Checksum

• Layer 2 Ethernet/WAN Frame Check Sequence (CRC)
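The Layer 3 part of the packet rewrite listed above can be sketched in Python. This assumes the input is just the IPv4 header bytes and uses hypothetical helper names; it decrements the TTL and recomputes the IPv4 header checksum (the 16 bit one's complement checksum defined in RFC 791, not a CRC). The Ethernet frame check sequence is normally regenerated by the egress port hardware, so it is not computed here.

```python
import struct


def ipv4_checksum(header: bytes) -> int:
    """One's complement of the one's complement sum of 16 bit words (RFC 791)."""
    if len(header) % 2:
        header += b"\x00"
    total = 0
    for (word,) in struct.iter_unpack("!H", header):
        total += word
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def rewrite_ipv4_header(header: bytes) -> bytes:
    """Decrement the TTL and recompute the header checksum (hypothetical helper)."""
    h = bytearray(header)
    if h[8] <= 1:
        # A real router punts this packet to the CPU to send ICMP time exceeded.
        raise ValueError("TTL expired")
    h[8] -= 1               # byte 8 is the TTL field
    h[10:12] = b"\x00\x00"  # checksum field is zero while summing
    h[10:12] = struct.pack("!H", ipv4_checksum(bytes(h)))
    return bytes(h)


def rewrite_ethernet_header(dst_mac: bytes, src_mac: bytes, ethertype: int = 0x0800) -> bytes:
    """New Layer 2 header: next hop MAC (from the adjacency) and egress interface MAC."""
    return dst_mac + src_mac + struct.pack("!H", ethertype)


if __name__ == "__main__":
    hdr = bytes.fromhex("45000073000040004011b861c0a80001c0a800c7")
    new_hdr = rewrite_ipv4_header(hdr)
    print(new_hdr[8], hex(ipv4_checksum(new_hdr)))  # 63 0x0 (TTL 64 -> 63, checksum verifies)
```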

Distributed CEF Switching Model (dCEF)

The distributed switching model moves packet processing from the Supervisor Engine to line cards equipped with DFC modules. The Distributed Forwarding Card (DFC) is a line card module that provides the same hardware forwarding engine as the PFC (Cisco Express Forwarding in hardware). Examples of DFC enabled line cards include the Cisco 6700 series and 6816 series line cards, and some line cards can be upgraded with a DFC module. Processing packets locally at the line card improves performance by offloading work from the Supervisor Engine. Latency also decreases because packet headers don't have to be sent to the Supervisor Engine for a forwarding decision.

The Supervisor Engine MSFC still builds and maintains the routing table. The FIB table (Layer 3 lookups) on the Supervisor Engine is derived from the routing table and the adjacency table is derived from the FIB and ARP table. Those tables are pushed from the Supervisor Engine to the local DFC on each line card. There is still a 32 Gbps shared bus and traditional centralized switching available for classic line cards with no DFC module. Cisco 3750 series switches support distributed CEF as well when deployed as part of a stack. The 3750 master switch pushes the FIB and adjacency table to the member switches where CEF is supported. The following describes the distributed switching model.

1. The packet arrives at an ingress port on the line card and is forwarded to the line card ASIC.

2. The fabric ASIC forwards the packet header to the local line card DFC module.

3. The DFC module copies the packet header to memory. The DFC Layer 2 forwarding engine does a CAM table lookup based on the destination MAC address. The switch sends an ARP request if the next hop MAC address is not in the ARP table.

4. The DFC Layer 2 forwarding engine then forwards the packet to the DFC Layer 3 forwarding engine for Layer 3 lookup (FIB Table), Layer 2 lookup (Adjacency Table), and Layer 4 processing which includes NetFlow, QoS ACLs and security ACLs.

5. The results of the lookups are forwarded back to the fabric ASIC. The information from the lookups is used to do packet header rewrites before forwarding packets.

6. The fabric ASIC forwards the packet with payload data over the switch fabric to the destination line card if it isn't the local line card.

7. The destination line card receives the packet, rewrites the header and forwards it out the destination egress port.

Packet Rewrite

• Layer 2 Source and Destination MAC Address

• Layer 3 (IP) TTL

• Layer 3 (IP) Header Checksum

• Layer 2 Ethernet/WAN Frame Check Sequence (CRC)

Ethernet Standard

Ethernet is the most widely deployed data link protocol in campus networks today. The primary Ethernet specifications in use are Fast Ethernet (100Base-T), Gigabit Ethernet (1000Base-T) and 10 Gigabit Ethernet (10GBase-T). Most desktops connect to the access layer switch with Fast Ethernet or Gigabit Ethernet. Most servers still connect at Gigabit speeds, while distribution and core layer switches are migrating to 10 Gigabit. The 100Base-T specification runs 100 Mbps over category 5 unshielded twisted pair (UTP) cable. Gigabit and 10 Gigabit Ethernet have specifications for UTP, STP and fiber cabling.

Ethernet uses CSMA/CD as its media contention method, which arbitrates access to a shared medium and handles collisions on half-duplex links. Full-duplex switch ports, including newer Gigabit ports, don't use CSMA/CD: each switch port is a separate collision domain, and the desktop and switch port can transmit at the same time. Some older links from desktops (or other devices) to the switch still run half-duplex. Devices on those links use CSMA/CD, and after a collision each device waits a random backoff time before attempting retransmission, so the devices are unlikely to collide again. The maximum standard (untagged) Ethernet frame size is 1518 bytes.
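The random wait on half-duplex links is the truncated binary exponential backoff from IEEE 802.3. A minimal sketch, assuming the 10/100 Mbps slot time of 512 bit times and hypothetical function names: after the nth collision a station waits a random number of slot times between 0 and 2^k - 1, where k = min(n, 10), and it discards the frame after 16 attempts.

```python
import random

SLOT_TIME_BITS = 512  # 10/100 Mbps slot time; half-duplex Gigabit uses 4096 bit times
BACKOFF_LIMIT = 10    # the backoff range stops growing after 10 collisions
ATTEMPT_LIMIT = 16    # the frame is discarded after 16 failed attempts


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Truncated binary exponential backoff after `collisions` collisions."""
    if collisions >= ATTEMPT_LIMIT:
        raise RuntimeError("excessive collisions: frame discarded")
    k = min(collisions, BACKOFF_LIMIT)
    return rng.randint(0, 2 ** k - 1)  # uniform in [0, 2^k - 1]


def backoff_seconds(collisions: int, bit_rate_bps: float, rng: random.Random) -> float:
    """Convert the chosen number of slot times into a wait time."""
    return backoff_slots(collisions, rng) * SLOT_TIME_BITS / bit_rate_bps


if __name__ == "__main__":
    rng = random.Random(42)
    # Two stations that collided each pick an independent random delay.
    for station in ("A", "B"):
        print(station, backoff_seconds(1, 100e6, rng))
```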

Optical Fiber Technologies

The current fiber standards for switch connectivity are Gigabit Ethernet and 10 Gigabit Ethernet over Multimode Fiber (MMF) and Single Mode Fiber (SMF). Single Mode Fiber carries only one mode of light across the fiber strand, using a laser as the light source. Multimode Fiber allows many modes of light to travel across the fiber strand at different angles, traditionally using an LED as the light source. Because those modes travel different path lengths, they arrive at slightly different times; this modal dispersion spreads the signal and limits the distance Multimode Fiber can support. Single Mode Fiber has no modal dispersion and therefore supports much greater distances. Single Mode Fiber has a 9 micron diameter fiber core and uses long wave lasers to send data between devices up to 10 kilometers apart. Multimode Fiber is available with 62.5 micron and 50 micron diameter cores, and 50 micron fiber supports longer distances than 62.5 micron fiber. Gigabit transmission over Multimode Fiber uses short wave (SX) and long wave (LX) lasers.

Cisco Switches

Performance Characteristics

The Supervisor Engine is a key component of switch performance. It must be fabric enabled to get the full performance of fabric enabled line cards with an onboard DFC. CEF720 line cards have a 40 Gbps (full-duplex) connection to the switch fabric. Line cards with DFC modules switch packets locally, which uses less fabric bandwidth and switches packets faster, and the DFC modules can be upgraded per line card to higher performance modules. Finally, lower line card oversubscription (fewer ports per ASIC) improves performance for Gigabit (GE) and 10 Gigabit (10 GE) Ethernet switch ports.
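Oversubscription can be expressed as the ratio of total port bandwidth to the bandwidth of the path those ports share (an ASIC or the fabric connection). A small sketch with hypothetical port counts and a hypothetical function name; a ratio of 1.0 or less means all ports can run at line rate at the same time.

```python
def oversubscription(ports: int, port_gbps: float, shared_gbps: float) -> float:
    """Aggregate port bandwidth divided by the bandwidth the ports share."""
    return (ports * port_gbps) / shared_gbps


if __name__ == "__main__":
    # Hypothetical line cards, each with a 40 Gbps fabric connection.
    print(oversubscription(48, 1, 40))   # 48 x GE   -> 1.2 (1.2:1)
    print(oversubscription(16, 10, 40))  # 16 x 10GE -> 4.0 (4:1)
```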

Fabric Switching Capacity

As mentioned, hardware (ASIC) based switching improves switch performance. Switch performance is usually described in terms of fabric switching capacity (Gbps) and line card forwarding rate (Mpps). The integrated switch fabric is available with the newer Supervisor Engine 720 and Supervisor Engine 2T. Earlier, standalone switch fabric modules (SFM) running at 256 Gbps were available for the Supervisor Engine 2.

The Supervisor Engine 720 switch fabric is a full-duplex 720 Gbps interconnected crossbar with discrete paths between all fabric enabled line cards and the Supervisor Engine.

The 32 Gbps shared bus is still available for older classic line cards deployed with the fabric enabled line cards. All line cards use the 32 Gbps shared bus when the Supervisor Engine 32 is deployed with the 6500 switch.

Switch performance (Gbps) depends on the combination of Supervisor Engine, MSFC, PFC, fabric enabled DFC line cards and line card oversubscription.

Switch Forwarding Rate and Throughput

The forwarding rate is specified in packets per second (pps) and describes the aggregate number of packets the switch forwards per second. Packet size affects the forwarding rate of any network device: as packet size increases, the forwarding rate (packets per second) decreases. The advantage of larger packets is higher throughput (bps), better packet efficiency and lower switch utilization, since it is easier to forward fewer large packets than many small ones. Some network switches can forward packets at wire rate on every switch port. Example 1 calculates the maximum forwarding rate and the switching throughput (bps) for the Cisco 4948 server farm switch, which forwards at a wire speed rate of 72 Mpps with the minimum packet size of 84 bytes. The wire speed calculation is as follows.

Example 1 Calculating Switch Forwarding Rate and Throughput

switch port forwarding rate = port speed / (8 bits/byte x 84 byte packet)

= 1000 Mbps (GE) / (8 bits/byte x 84 bytes)

= 1,000,000,000 bps / (8 bits/byte x 84 bytes)

= 1,488,095 pps ≈ 1.5 Mpps

switch chassis forwarding rate = 1.5 Mpps x 48 Gigabit ports (1000 Mbps)

= 72 Mpps

switching throughput capacity = 48 GE ports x 1000 Mbps

= 48 Gbps x 2 (full-duplex)

= 96 Gbps

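Cisco uses an 84 byte packet size for performance testing and rating of devices: the 64 byte minimum Ethernet frame plus 8 bytes of preamble and start frame delimiter and a 12 byte interframe gap. The average packet size in production traffic is typically much larger than 84 bytes. The theoretical throughput between devices is limited by the average packet size and TCP window size.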
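The arithmetic in Example 1 can be reproduced in a few lines. This is a sketch with hypothetical function names; it derives the 84 byte figure from the minimum frame plus preamble and interframe gap, and shows the unrounded per-port rate (about 1.488 Mpps) that the example rounds to 1.5 Mpps.

```python
MIN_FRAME_BYTES = 64       # minimum Ethernet frame, including the FCS
PREAMBLE_SFD_BYTES = 8     # preamble + start frame delimiter
INTERFRAME_GAP_BYTES = 12  # 96 bit times of idle between frames
WIRE_BYTES = MIN_FRAME_BYTES + PREAMBLE_SFD_BYTES + INTERFRAME_GAP_BYTES  # 84


def port_pps(port_bps: float, wire_bytes: int = WIRE_BYTES) -> float:
    """Packets per second one port can carry at wire speed."""
    return port_bps / (8 * wire_bytes)


def chassis_pps(ports: int, port_bps: float) -> float:
    return ports * port_pps(port_bps)


def throughput_bps(ports: int, port_bps: float, full_duplex: bool = True) -> float:
    return ports * port_bps * (2 if full_duplex else 1)


if __name__ == "__main__":
    ge = 1_000_000_000
    print(f"{port_pps(ge) / 1e6:.3f} Mpps per GE port")            # 1.488
    print(f"{chassis_pps(48, ge) / 1e6:.1f} Mpps for 48 ports")    # 71.4
    print(f"{throughput_bps(48, ge) / 1e9:.0f} Gbps full-duplex")  # 96
    # A maximum size 1518 byte frame occupies 1538 bytes on the wire.
    print(f"{port_pps(ge, 1518 + 20):,.0f} pps per GE port with 1518 byte frames")
```

The last line shows why larger packets lower the forwarding rate while keeping the same bit rate.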

Switch Architecture

Cisco switches are defined according to various hardware classes with features that determine where the switch is deployed. Those features include throughput, modularity, network layer, hardware modules and redundancy.

Access Switches

The access switch provides desktop and server connectivity at the access layer. The most popular switches deployed today for desktop connectivity are the Cisco 3750 and 3560 series. The 3750-X is a fixed 48 port Gigabit switch with uplink modules that have Gigabit and 10 Gigabit ports, and 24 port Gigabit models are also available. Because the architecture is fixed, no additional line cards can be added when more switch ports are required. The 3750 and 3560 switches are used for desktop connectivity and deployed in the wiring closet. These fixed configuration switches don't use a Supervisor Engine and often offer redundant power supplies as an option. For the data center server farm, the access switch is the Cisco 4900M, which has a modular architecture with a variety of Gigabit and 10 Gigabit line cards and port counts.

Cisco 3560-X Series Switch

• Fixed Architecture

• Access Layer (Wiring Closet)

• 24/48 x GE Port Models

• Not Stackable

• GE/10 GE Uplink Network Modules

• Redundant 1100W Power Supplies

• Switching Capacity: Switch Fabric is 160 Gbps

• Forwarding Rate: 101.2 Mpps (48 Port) / 65.5 Mpps (24 Port)

Cisco 3750-X Series Switch

• Fixed Architecture

• Access Layer (Wiring Closet)

• 24/48 x GE Port Models

• Stackable

• GE/10 GE Uplink Network Modules

• Redundant 1100W Power Supplies

• Switching Capacity: Switch Fabric is 160 Gbps

• Forwarding Rate: 101.2 Mpps (48 Port) / 65.5 Mpps (24 Port)

Cisco 4900M Series Switch

The Cisco 4900 series switches are designed specifically for the data center server farm. They are access switches that connect to distribution switches. Available models include the 4948, 4948-10GE, 4948E, 4928 and 4900M, each with different network port modules and throughput ratings. The Cisco 4900M has the highest throughput and the most GE and 10 GE switch ports.

• Modular Architecture

• Server Farm

• 20/32/40 x GE Port Models

• 4/8 x 10 GE Port Models

• Redundant 1000W Power Supplies

• Switching Capacity: 320 Gbps

• Forwarding Rate: 250 Mpps

Cisco 4510R+E Switch

The Cisco 4500 switch is typically deployed as a distribution layer switch at larger branch offices and smaller data centers. The VSS feature will be available during 2012. There are multiple Supervisor Engines and line cards available with the 4500 series switches. The line cards are a mix of various GE and 10 GE interfaces. The Supervisor Engine and line card support is chassis specific. The 4500 series Supervisor Engine throughput varies according to model.

• Modular Architecture

• Distribution Layer

• 10 Chassis Slots

• 384 x GE Ports

• 104 x 10 GE Ports

• Redundant Supervisor Engines

• Supervisor Engine Support: V-10GE, 6E, 7E

• 9000W Redundant Power Supplies

• Switching Capacity: 848 Gbps (7E)

• Forwarding Rate: 250 Mpps (7E)

Cisco 6513E Switch

The newer Cisco 6500E (enhanced chassis) includes improvements such as faster Supervisor Engine failover (50 msec to 200 msec) with the Supervisor Engine 720 and 2T. Four 6500E chassis are available: the 6503E, 6506E, 6509E and 6513E, each with a specific number of slots. The 6513E chassis with the Supervisor Engine 720, VS-S720, VS-S2T-10G or VS-S2T-10G-XL supports the noted forwarding rate with dCEF720 line cards.

• Modular Architecture

• Distribution/Core Layer

• 13 Slot Chassis

• 576 x GE Ports

• 176 x 10 GE Ports

• 44 x 40 GE Ports

• Redundant Supervisor Engines

• Supervisor Engine Support: 1A, 2, 32, 720, VS-S720, VS-S2T

• Service Modules

• Redundant 8700W Power Supplies

• Chassis Switching Capacity: 4 Tbps (VS-S2T)

• Chassis Forwarding Rate: 720 Mpps (VS-S2T)

Cisco Nexus 7018

The Cisco Nexus switches are the fastest distribution and core switches available from Cisco. They are typically used in data centers and service provider core networks. Four Nexus 7000 models are available: the 7004, 7009, 7010 and 7018 chassis. The Supervisor Engine 1, 2 and 2E are specific to the Nexus switches and can only be used with them.

• Modular Architecture

• Distribution/Core Layer

• 18 Slot Chassis

• 768 x GE/10 GE Ports

• 96 x 40 GE Ports

• 32 x 100 GE Ports

• Redundant Supervisor Engines

• Supervisor Engine Support: 1, 2, 2E

• 4 x 7500W Power Supplies

• Slot Switching Capacity is 550 Gbps

• Chassis Switching Capacity: 18.7 Tbps

• Chassis Forwarding Rate: 11,520 Mpps

Multilayer Switch Feature Card (MSFC)

The Multilayer Switch Feature Card (MSFC) is a module onboard the Cisco 6500 Supervisor Engine that manages control plane traffic. The MSFC has a route processor (RP) and a switch processor (SP) that process network control traffic. The route processor manages Layer 3 processes in software, such as building the routing table, neighbor peering, route redistribution and routing the first packet to a destination. Some services, such as network address translation (NAT), GRE, encryption and ARP, are handled at least partly in software, meaning they cannot be fully hardware switched on the PFC. The switch processor manages Layer 2 control protocols such as STP, VTP, CDP, PAgP, LACP and UDLD. The MSFC3 is integrated with the Supervisor Engine 720 and has a process switching capacity of 500 Kpps.

The MSFC builds the routing table, derives the FIB and pushes it down to the PFC and to any DFC line card modules that are deployed. The FIB is an optimized routing table built for high speed switching of packets in hardware. An adjacency table, derived from the FIB and ARP table, holds Layer 2 forwarding information similar to that of the CAM table. Whenever a routing or topology change occurs, the MSFC updates the routing table and FIB and pushes those changes to all PFC and DFC modules.
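The relationship between the MSFC and the forwarding engines can be sketched as one control plane table that is pushed to every PFC and DFC. The classes below are hypothetical; the FIB is simplified to a prefix to next hop map chosen by lowest administrative distance, and the whole table is re-pushed on each change, whereas a real implementation would typically send only the changes.

```python
from typing import Dict, List, Tuple


class ForwardingEngine:
    """Stands in for a PFC or DFC holding its own hardware copy of the FIB."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.fib: Dict[str, str] = {}  # prefix -> next hop

    def program(self, fib: Dict[str, str]) -> None:
        self.fib = dict(fib)  # the engine forwards from its own copy


class Msfc:
    """Hypothetical control plane: routing table in, FIB pushed out."""

    def __init__(self, engines: List[ForwardingEngine]) -> None:
        self.engines = engines
        # prefix -> list of (administrative distance, next hop) from routing protocols
        self.rib: Dict[str, List[Tuple[int, str]]] = {}

    def learn(self, prefix: str, distance: int, next_hop: str) -> None:
        self.rib.setdefault(prefix, []).append((distance, next_hop))
        self._push()

    def withdraw(self, prefix: str, next_hop: str) -> None:
        remaining = [r for r in self.rib.get(prefix, []) if r[1] != next_hop]
        if remaining:
            self.rib[prefix] = remaining
        else:
            self.rib.pop(prefix, None)
        self._push()

    def _push(self) -> None:
        # Derive the FIB (best route per prefix) and program every engine.
        fib = {prefix: min(routes)[1] for prefix, routes in self.rib.items()}
        for engine in self.engines:
            engine.program(fib)


if __name__ == "__main__":
    pfc, dfc = ForwardingEngine("PFC"), ForwardingEngine("DFC slot 3")
    msfc = Msfc([pfc, dfc])
    msfc.learn("10.0.0.0/8", 110, "192.0.2.1")  # e.g. an OSPF route
    msfc.learn("10.0.0.0/8", 90, "192.0.2.2")   # e.g. an EIGRP route, which wins
    print(pfc.fib, dfc.fib)
    msfc.withdraw("10.0.0.0/8", "192.0.2.2")    # topology change
    print(dfc.fib)                              # falls back to 192.0.2.1
```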

Policy Feature Card (PFC)

The Policy Feature Card is a hardware switching module on the Cisco 6500 series Supervisor Engine that forwards packets in the data plane. It includes a Layer 2 and a Layer 3 forwarding engine, and packets are switched in hardware by high speed ASICs using lookups in the forwarding information base (FIB) and adjacency table.

The FIB is an optimized routing table built by the MSFC and pushed down to the PFC Layer 3 engine for Layer 3 forwarding of packets. The adjacency table, derived from the FIB and ARP table, holds the Layer 2 forwarding information. A DFC deployed on a switch line card is essentially the same as the PFC, with the same features. The PFC uses multiple TCAM tables for high speed Layer 2 and Layer 3 lookups along with security ACL and QoS ACL lookups, and it also maintains NetFlow counters.

Switching Fabric

The Cisco 6500 switch has a 32 Gbps shared bus designed for older classic line cards that are not fabric enabled. The standalone SFM, introduced with the Supervisor Engine 2, increased switching capacity to 256 Gbps full-duplex using a crossbar fabric. The crossbar fabric uses 18 discrete channels between all line cards and the Supervisor Engine. The Supervisor Engine 720 integrates the crossbar switch fabric onboard instead of as a standalone module, increasing full-duplex switching capacity to 720 Gbps.

Shaun Hummel is the author of Network Performance and Optimization Guide
