Earlier in the history of computer design, in response to the need for more computing performance, single-CPU systems evolved into multi-CPU systems. A more recent similar trend in system design is to place multiple computing cores on a single chip. Each core appears as a separate processor to the operating system. Whether the cores appear across CPU chips or within CPU chips, we call these multicore or multiprocessor systems.

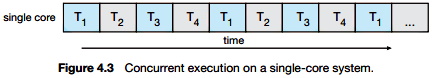

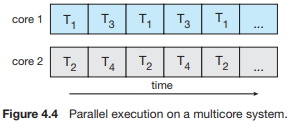

Multithreaded programming provides a mechanism for more efficient use of these multiple computing cores and improves concurrency. Consider an application with four threads. On a system with a single computing core, concurrency merely means that the execution of the threads will be interleaved over time (Figure 4.3), because the processing core is capable of executing only one thread at a time. On a system with multiple cores, however, concurrency means that the threads can run in parallel, because the system can assign a separate thread to each core (Figure 4.4).

Notice the distinction between parallelism and concurrency in this discussion. A system is parallel if it can perform more than one task simultaneously. In contrast, a concurrent system supports more than one task allowing all the tasks to make progress. Thus, it is possible to have concurrency without parallelism. Before the advent of SMP and multicore architectures, most computer systems had only a single processor. CPU schedulers were designed to provide the illusion of parallelism by rapidly switching between processes in the system, thereby allowing each process to make progress. Such processes were running concurrently, but not in parallel.

As systems have grown from tens of threads to thousands of threads, CPU designers have improved system performance by adding hardware to improve thread performance. Modern Intel CPUs frequently support two threads per core, while the Oracle T4 CPU supports eight threads per core. This support means that multiple threads can be loaded into the core for fast switching. Multicore computers will no doubt continue to increase in core counts and hardware thread support.

About the Authors

Abraham Silberschatz is the Sidney J. Weinberg Professor of Computer Science at Yale University. Prior to joining Yale, he was the Vice President of the Information Sciences Research Center at Bell Laboratories. Prior to that, he held a chaired professorship in the Department of Computer Sciences at the University of Texas at Austin.

Professor Silberschatz is a Fellow of the Association of Computing Machinery (ACM), a Fellow of Institute of Electrical and Electronic Engineers (IEEE), a Fellow of the American Association for the Advancement of Science (AAAS), and a member of the Connecticut Academy of Science and Engineering.

Greg Gagne is chair of the Computer Science department at Westminster College in Salt Lake City where he has been teaching since 1990. In addition to teaching operating systems, he also teaches computer networks, parallel programming, and software engineering.

Operating System Concepts, now in its ninth edition, continues to provide a solid theoretical foundation for understanding operating systems. The ninth edition has been thoroughly updated to include contemporary examples of how operating systems function. The text includes content to bridge the gap between concepts and actual implementations. End-of-chapter problems, exercises, review questions, and programming exercises help to further reinforce important concepts. A new Virtual Machine provides interactive exercises to help engage students with the material.

Reader Adam Sinclair says, "I'm writing this review from the perspective of a student. I am finishing an Operating Systems course at university and I have to say this book is fantastic at introducing new concepts. If there is ever a conversation about OS, I always refer to this book. The content is very well laid out and organized in a way that can be read from beginning to end. There is no need to jump from one chapter to another (unless you want to skip sections)."

Reader Chetan Sharma says, "This book is bible for operating system knowledge. It covers very important concepts of Process Management and Memory Management. This book is good for all type of readers - Beginner, Intermediate and Advanced reader. Highly recommended for Students/Professionals/Readers who want to enhance their knowledge.

More Computer Architecture Articles:

• Microprocessor Registers

• Microcontroller Architectures

• Windows Operating System Services, Functions, Routines, Processes, Threads, and Jobs

• Processor Affinity in Symmetric Multiprocessing

• Stored Program Architecture

• Expanding the Resources of Microcontrollers

• Microcontroller Registers

• Introduction to Microprocessor Programming

• Basic Electronics for Computer Architecture

• Simplified Windows Architecture Overview